Want to add some cutting-edge AI magic to your code? That's right, we're talking about integrating ChatGPT, the powerhouse of large language models, into your Python scripts. With ChatGPT, your code can interpret natural-language input and generate human-like responses, revolutionizing the way users interact with your applications.

How to use ChatGPT in a Python script

To use the ChatGPT language model in a Python script, you'll use the OpenAI Python library. Here are the steps to follow:

- First, sign up for OpenAI API access at https://beta.openai.com/signup/ to get an API key.
- Use pip to install the OpenAI Python library by entering the following line in the terminal. The examples in this post use the library's pre-1.0 interface (`openai.ChatCompletion`), which was removed in version 1.0, so pin an older release:

  ```
  pip install "openai<1.0"
  ```

- Configure your API key by creating an environment variable named OPENAI_API_KEY with your API key as its value.
- Add the following lines to your Python code to import the OpenAI API client, plus the standard `os` module used to read the environment variable:

  ```python
  import os
  import openai
  ```

- Initialize the OpenAI API client by adding the following lines to your Python code:

  ```python
  # Read the key from the environment instead of hard-coding it in your source.
  openai.api_key = os.getenv("OPENAI_API_KEY")
  # Which GPT model to use. Several models are available, each with
  # different capabilities and performance characteristics.
  model_engine = "gpt-3.5-turbo"
  ```

Now call the `openai.ChatCompletion.create()` function to generate text using the ChatGPT language model. Here's an example of how to generate a response to a given prompt. The `messages` list starts with a "system" message that sets the assistant's behavior, followed by the user's question:

```python
response = openai.ChatCompletion.create(
    model='gpt-3.5-turbo',
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, ChatGPT!"},
    ],
)
# The reply is in the first (and here only) choice.
message = response.choices[0]['message']
print("{}: {}".format(message['role'], message['content']))
```

Output

assistant: Hello! How can I assist you today?
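The steps above can be packaged into small helpers that are easy to test without calling the API. This is a minimal sketch, not part of the OpenAI library: `load_api_key`, `build_messages`, and `extract_reply` are hypothetical names, and `extract_reply` assumes the dict-style response shape used in the example above (`response['choices'][0]['message']`).

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Return the API key from the environment, failing early with a clear message."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your OpenAI API key.")
    return key


def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Build the messages list: a system message first, then the user's question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def extract_reply(response):
    """Return (role, content) from the first choice of a chat completion response.

    Assumes the dict-like shape returned by pre-1.0 versions of the openai
    library; plain dicts with the same shape work too.
    """
    message = response["choices"][0]["message"]
    return message["role"], message["content"].strip()


if __name__ == "__main__":
    # A hand-written response with the same shape as the API's, so this runs offline.
    fake_response = {
        "choices": [
            {"message": {"role": "assistant", "content": " Hello! How can I assist you today?"}}
        ]
    }
    role, content = extract_reply(fake_response)
    print("{}: {}".format(role, content))
```

With the real library, you would pass `build_messages(...)` as the `messages` argument to `openai.ChatCompletion.create()` and hand the returned response to `extract_reply()`.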

Awesome, right? Let's take a look at another example of how to integrate ChatGPT into a Python script, this time using more parameters.

How to use ChatGPT API parameters

In the example below, more parameters are passed to `openai.ChatCompletion.create()` to control the response. Here's what each one means:

- The `model` parameter specifies which language model to use (`gpt-3.5-turbo` in these examples)
- The `messages` parameter is the list of messages the model responds to; each message has a `role` ("system", "user", or "assistant") and `content`
- The `max_tokens` parameter sets the maximum number of tokens (words or pieces of words) that the model should generate
- The `temperature` parameter controls the level of randomness in the generated text: higher values such as 0.8 make the output more varied, while lower values such as 0.2 make it more focused and predictable
- The `stop` parameter can be used to specify one or more strings that mark the end of the generated text; the model stops generating when it reaches one of them
- If you want to generate multiple responses, you can set `n` to the number of responses you want returned

The `strip()` method removes any leading and trailing whitespace from the generated text.

```python
response = openai.ChatCompletion.create(
    model='gpt-3.5-turbo',
    n=1,              # number of responses to generate
    max_tokens=100,   # upper limit on the length of the reply
    temperature=0.7,  # moderate randomness
    messages=[
        {"role": "system", "content": "You are a helpful assistant with exciting, interesting things to say."},
        {"role": "user", "content": "Hello, how are you?"},
    ],
)
message = response.choices[0]['message']
print("{}: {}".format(message['role'], message['content'].strip()))
```

The generated text is returned in the `choices` field of the response, a list with one entry per requested response. Each entry contains a `message` object with a `role` (here "assistant") and `content` (the generated text). In this example, we only requested one response, so we just take the first item in the `choices` list and print its role and generated text.

Output

assistant: Hello! I am just a computer program, but I am functioning properly and ready to help. How can I assist you today?
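Before sending a request, it can help to collect the parameters described above in one place and catch obviously invalid values locally. The sketch below uses a hypothetical helper, `build_chat_params`, which is not an OpenAI function; the limits it checks (temperature between 0 and 2, at most four stop sequences) are assumptions based on OpenAI's API reference at the time of writing and may change. A second hypothetical helper, `all_replies`, reads every entry in `choices`, which matters once `n` is greater than 1.

```python
def build_chat_params(messages, model="gpt-3.5-turbo", max_tokens=None,
                      temperature=None, stop=None, n=1):
    """Collect keyword arguments for a chat completion request.

    Only parameters that were actually set are included, so the API's own
    defaults apply to everything else.
    """
    if not messages:
        raise ValueError("messages must contain at least one message")
    if max_tokens is not None and max_tokens < 1:
        raise ValueError("max_tokens must be a positive integer")
    if temperature is not None and not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    if isinstance(stop, str):
        stop = [stop]  # accept a single stop string for convenience
    if stop is not None and len(stop) > 4:
        raise ValueError("at most 4 stop sequences are allowed")
    if n < 1:
        raise ValueError("n must be at least 1")

    params = {"model": model, "messages": messages, "n": n}
    if max_tokens is not None:
        params["max_tokens"] = max_tokens
    if temperature is not None:
        params["temperature"] = temperature
    if stop is not None:
        params["stop"] = stop
    return params


def all_replies(response):
    """Return the stripped text of every choice (one per requested response)."""
    return [choice["message"]["content"].strip() for choice in response["choices"]]
```

With the pre-1.0 library, the result can be unpacked straight into the call, for example `openai.ChatCompletion.create(**build_chat_params(messages, temperature=0.7, n=2))`, and `all_replies(response)` then returns both generated texts.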

It's important to note that although ChatGPT can seem magical, it does not have human-level intelligence. Responses shown to your users should always be properly vetted and tested before being used in a production context. Don't expect ChatGPT to reliably understand the physical world, reason logically, do math correctly, or check facts.

Handling errors from the ChatGPT API

It's a best practice to monitor exceptions that occur when interacting with any external API. For example, the API might be temporarily unavailable, or its expected parameters or response format might change so that your code needs updating. When that happens, your code should be what tells you about it. Here's how to do it with Rollbar:

```python
import openai
import rollbar

# Replace with your project's Rollbar access token; 'testenv' is the environment name.
rollbar.init('your_rollbar_access_token', 'testenv')


def ask_chatgpt(question):
    response = openai.ChatCompletion.create(
        model='gpt-3.5-turbo',
        n=1,
        messages=[
            {"role": "system", "content": "You are a helpful assistant with exciting, interesting things to say."},
            {"role": "user", "content": question},
        ],
    )
    message = response.choices[0]['message']
    return message['content']


try:
    print(ask_chatgpt("Hello, how are you?"))
except Exception as e:
    # Report the exception, including its traceback, to Rollbar
    rollbar.report_exc_info()
    print("Error asking ChatGPT", e)
```

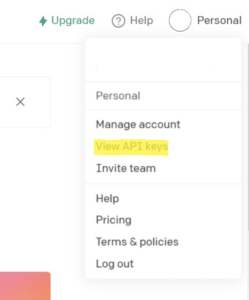

To get your Rollbar access token, sign up for free and follow the instructions for Python.
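Reporting an exception tells you that something went wrong; for transient failures such as the API being temporarily unavailable, you might also retry the call before giving up. The sketch below uses exponential backoff, a general technique rather than anything specific to Rollbar or the OpenAI library. `call_with_retries` is a hypothetical helper, and which exception types are worth retrying is an assumption you should adjust (with the pre-1.0 library, its error classes live in `openai.error`).

```python
import random
import time


def call_with_retries(func, *args, retries=3, base_delay=1.0,
                      retry_on=(Exception,), sleep=time.sleep, **kwargs):
    """Call func(*args, **kwargs), retrying when it raises one of `retry_on`.

    The base wait doubles after each failed attempt (base_delay, 2 * base_delay,
    ...), plus up to base_delay of random jitter so many clients don't retry in
    lockstep. After `retries` retries the last exception is re-raised, so an
    outer try/except (for example one that reports to Rollbar) still sees it.
    """
    for attempt in range(retries + 1):
        try:
            return func(*args, **kwargs)
        except retry_on:
            if attempt == retries:
                raise  # out of retries: let the caller handle or report it
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

Inside the existing `try` block, `print(call_with_retries(ask_chatgpt, "Hello, how are you?"))` would retry transient failures and still hand the final exception to `rollbar.report_exc_info()`. The `sleep` argument exists only so the waits can be replaced in tests.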

Next steps

We can't wait to see what you build with ChatGPT. Happy coding!